My Worst Day in IT: Apple Device Management Horror Stories

Working in IT means you likely have your fair share of horror stories. In a recent webinar, we weren’t surprised to find that almost all of our Apple experts’ stories had one thing in common: an OS update that went sideways at exactly the wrong time. These five real incidents from the IT support trenches were too horrifying (and hilarious) not to share.

Keep reading to see how quickly things can break on your Apple devices, and how modern Apple device management practices can keep you out of the blast radius.

Apple Horror Story 1: “Erase and Install” on the Family Mac

One product manager’s earliest “IT job” was at home. They were in middle school when curiosity led them to a Mac OS X installer. The “Erase and Install” option sounded exciting, so they clicked it, wiping the family’s entire Mac in one shot. Years of family data disappeared because there were no safeguards and no one understood what that installer button really did. It was a formative “worst day in IT” experience that perfectly illustrated how a single, uninformed decision can become a catastrophic data‑loss event.

What went wrong:

- No separation between “power user” controls and normal usage.

- No backup strategy for critical personal data.

- A destructive workflow (“erase and install”) looked just as friendly as the safe option.

How to prevent this with Apple MDM & modern tools:

- Lock down OS install flows on managed devices so users cannot choose destructive paths.

- Use zero‑touch deployment on all devices so users don’t need to run full installers.

- Enforce backup and snapshot policies for critical endpoints so you can recover from mistakes.

Takeaway for Apple admins:

Treat every Mac like that family computer: assume someone will eventually click the most destructive button available. Use policy, role‑based access, and guided update workflows to keep end users on rails instead of relying on their ability to interpret installer jargon.

Apple Horror Story 2: The Professor Who Beat Every Control

In a university environment, Mac OS X Lion (10.7) launched right at the start of the semester. The IT team did what many of us have done: they blocked upgrades via the App Store and tried to standardize on a stable macOS version for the entire term. However, one graphic‑design professor still managed to bypass the protections, possibly with an installer on a USB stick, and upgraded anyway. The result: broken printing, failed directory binding, and a live classroom running on a half‑functional machine. The admin lost a great deal of time untangling the damage from one unauthorized upgrade.

What went wrong:

- Reliance on “soft” App Store controls that didn’t cover all upgrade paths.

- No hard enforcement tying role/department to allowed OS versions.

- No quick remediation path when a device went rogue on the OS level.

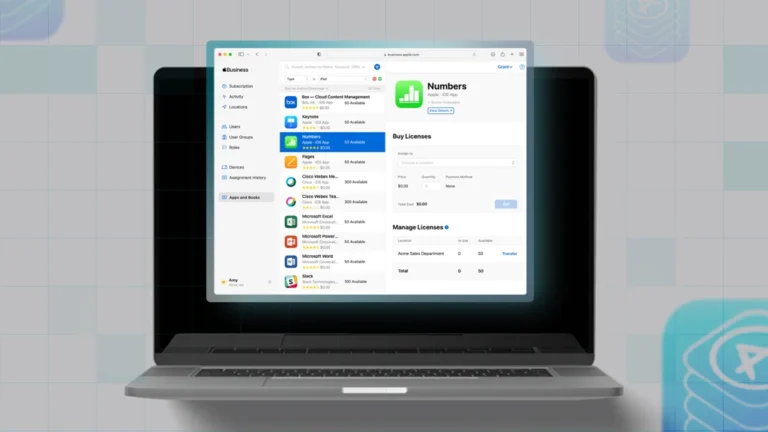

How to prevent this with Apple MDM & modern tools:

- Use strict, role‑based OS version policies: professors, labs, and executive machines should be pinned to tested builds.

- Enforce upgrades only via managed mechanisms (e.g., MDM‑driven workflows, declarative updates), and block unmanaged installers.

- Continuously monitor OS versions and flag any device that deviates from policy for immediate remediation.
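
To make the monitoring idea concrete, here is a minimal sketch of a version-policy check. It is not tied to any particular MDM product: the role names, the pinned versions in `ALLOWED_MAX_VERSION`, and the `Device` inventory records are all hypothetical, and a real setup would pull this data from your management platform's inventory.

```python
from dataclasses import dataclass

# Hypothetical policy: the highest macOS major.minor each role may run.
ALLOWED_MAX_VERSION = {
    "faculty": (14, 7),
    "lab": (14, 7),
    "it-pilot": (15, 2),
}


@dataclass
class Device:
    name: str
    role: str
    os_version: str  # e.g. "14.6.1", as reported by inventory


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '14.6.1' into (14, 6, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def out_of_policy(devices: list[Device]) -> list[Device]:
    """Return devices whose major.minor version exceeds their role's pin."""
    flagged = []
    for device in devices:
        allowed = ALLOWED_MAX_VERSION.get(device.role)
        # Unknown roles are flagged too, so nothing slips through unclassified.
        if allowed is None or parse_version(device.os_version)[:2] > allowed:
            flagged.append(device)
    return flagged


# Sample inventory (made up for illustration).
fleet = [
    Device("design-prof-mac", "faculty", "15.1"),
    Device("lab-imac-07", "lab", "14.6.1"),
    Device("helpdesk-mbp", "it-pilot", "15.2"),
]

for device in out_of_policy(fleet):
    print(f"{device.name}: {device.os_version} is outside policy for role '{device.role}'")
```

With this sample data, only `design-prof-mac` is flagged. Comparing parsed tuples rather than raw strings matters here: as text, "14.10" would sort before "14.9".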

Takeaway for Apple admins:

Keep this story in your back pocket to explain why certain updates are NOT advisable. Change control for OS versions should be as strict as change control for core services. Use your management platform to define who can be on which macOS version, enforce it declaratively, and surface outliers instantly instead of discovering them when someone’s mid‑lecture and nothing prints.

Apple Horror Story 3: The Mac Bricked Before Class

Another university story: a professor’s Mac had just been carefully updated by IT and was in a good, supported state. Early one morning, he called in a panic: the machine was now effectively bricked. After the visit from IT, he’d tried to “fix” or update things himself and managed to wipe or break the computer right before he needed it for teaching.

The admin rushed in around 6 a.m. and spent roughly 35 minutes trying to rebuild the device and reinstall specialized software under time pressure. No one could fully explain how he broke it that badly, but the result was the same: an emergency rebuild right before class.

What went wrong:

- No clear, supported path for the user to request or trigger updates safely.

- Too much freedom to attempt OS‑level fixes or reinstalls without guardrails.

- No fast roll‑back or “known good” baseline to revert to when something went wrong.

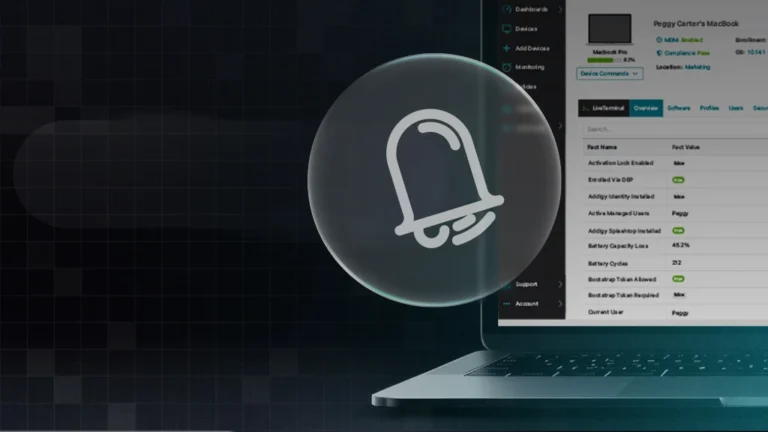

How to prevent this with Apple MDM & modern tools:

- Provide a managed, self‑service path for updates and upgrades so users never need to grab full installers or run recovery workflows on their own.

- Restrict OS reinstall and low‑level tools on production Macs (and keep those flows IT‑only).

- Maintain standardized, automated rebuild workflows so a device can be returned to a “known good” state quickly, with required prebuilt apps and settings.

Takeaway for Apple admins:

If users feel like the fastest way to get help is to “fix macOS themselves,” the environment is too open and the support process is too opaque. Give them a safe, guided way to stay current and make sure your rebuild process is so automated that even a worst‑case morning emergency doesn’t derail the day.

Apple Horror Story 4: The 10.6 to 10.7 Server Migration Dumpster Fire

One speaker described a brutal migration from a 10.6.8 server to 10.7. What should have been an upgrade turned into, in their words, a “relentless garbage fire.” Core services didn’t transition cleanly, directory services misbehaved, and an entire office was effectively offline for the better part of a few days. The upgrade had been treated more like a one‑time jump than a carefully staged infrastructure change, and the cost was extended downtime.

What went wrong:

- Insufficient staging and testing of the new platform before production cutover.

- No phased rollout or parallel environment to fall back to.

- Underestimation of how deeply OS changes impact dependent services.

How to prevent this with Apple MDM & modern tools:

- Always test major OS transitions on a small, representative group of devices before broad rollout.

- Use segmentation (by site, department, or role) to stage updates and monitor impact step by step.

- Ensure you have a roll‑back or contingency plan, including backups of configs and data, before touching core services.
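
The staging advice above can be sketched in a few lines. This is an illustration under stated assumptions, not how any specific MDM implements rings: the ring names, the cumulative shares in `RINGS`, the 2% failure threshold, and the sample serial numbers are all hypothetical.

```python
import hashlib

# Hypothetical rings: (name, cumulative share of the fleet offered the update).
RINGS = [("pilot", 0.05), ("early", 0.25), ("broad", 1.00)]


def ring_for(serial: str) -> str:
    """Deterministically map a device serial to a ring using a stable hash."""
    digest = hashlib.sha256(serial.encode("utf-8")).digest()
    # Interpret the first 4 bytes as a fraction in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    for name, cumulative_share in RINGS:
        if bucket < cumulative_share:
            return name
    return RINGS[-1][0]


def may_promote(failures: int, devices_in_ring: int, max_failure_rate: float = 0.02) -> bool:
    """Gate: only move to the next ring if the observed failure rate is acceptable."""
    if devices_in_ring == 0:
        return False  # No evidence yet, so do not promote.
    return failures / devices_in_ring <= max_failure_rate


# Made-up serials, purely for illustration.
for serial in ["C02EXAMPLE01", "C02EXAMPLE02", "C02EXAMPLE03"]:
    print(serial, "->", ring_for(serial))

print("promote past pilot?", may_promote(failures=1, devices_in_ring=100))
```

Hashing the serial keeps ring membership stable between runs, so a device does not hop between pilot and broad rollout. In practice you would likely also hand-pick pilot devices by site, department, or role, as the list above suggests, rather than rely on a hash alone.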

Takeaway for Apple admins:

Treat major macOS and server‑side shifts like any other infrastructure migration: test, stage, monitor, then roll out. Use your management platform to build pilot rings, observe real‑world behavior, and only then promote new versions to the wider fleet. A bad day is inevitable if your first test is “the entire office.”

Apple Horror Story 5: The Student Who Deleted 2,000 SCCM Packages

On the Windows side (though the lessons apply to macOS too), a student worker with access to the SCCM 2007 console clicked the wrong thing and deleted the entire distribution point. Over 2,000 deployment packages, scripts, and installers, built up over years of slow, careful IT work, disappeared in one click. Restoring from backup took about three days, during which time the university’s software deployment capability was severely impaired. It became the kind of story admins still tell as a warning: one mis‑scoped account can erase years of effort.

What went wrong:

- A junior, temporary staff member held a level of privilege that no one in that role should have.

- No safety rails around destructive operations in the management system.

- Recovery was possible, but slow and painful, highlighting gaps in backup and change processes.

How to prevent this in a macOS world with Apple MDM & modern tools:

- Apply least‑privilege access: limit who can create, modify, or delete high‑impact content like packages and policies.

- Require approvals or reviews for destructive changes, especially deletions that affect many devices.

- Ensure regular backups and export of critical configurations so you can recover the management layer as well as endpoints.
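
As a rough sketch of how least privilege and approvals fit together, the check below refuses deletes from roles that lack the permission and requires a second person to sign off on bulk deletes. The role names, the `ROLE_PERMISSIONS` table, and the threshold of 10 items are hypothetical; real consoles expose their own role and approval models.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical role-to-permission mapping for a management console.
ROLE_PERMISSIONS = {
    "student-worker": {"view"},
    "technician": {"view", "create", "modify"},
    "admin": {"view", "create", "modify", "delete"},
}

# Deleting more than this many items at once needs a second person's sign-off.
BULK_DELETE_THRESHOLD = 10


@dataclass
class DeleteRequest:
    requester: str
    role: str
    item_count: int
    approved_by: Optional[str] = None


def authorize_delete(request: DeleteRequest) -> tuple[bool, str]:
    """Return (allowed, reason) for a delete request."""
    if "delete" not in ROLE_PERMISSIONS.get(request.role, set()):
        return False, "role lacks delete permission"
    if request.item_count > BULK_DELETE_THRESHOLD:
        # Self-approval does not count as a review.
        if request.approved_by is None or request.approved_by == request.requester:
            return False, "bulk delete requires approval from a second admin"
    return True, "allowed"


print(authorize_delete(DeleteRequest("sam", "student-worker", 2000)))
# (False, 'role lacks delete permission')
print(authorize_delete(DeleteRequest("alex", "admin", 2000)))
# (False, 'bulk delete requires approval from a second admin')
print(authorize_delete(DeleteRequest("alex", "admin", 2000, approved_by="jordan")))
# (True, 'allowed')
```

Under rules like these, the student worker in the story would have been stopped at the first check, and even a full admin could not have removed 2,000 packages without a second set of eyes.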

Takeaway for Apple admins:

Your management platform is just as critical as the devices it controls. Guard it with strong role-based access controls, audit trails, and regular configuration backups. One mistake in your console should never be able to erase your entire deployment strategy for days at a time.

Turn Your Worst IT Days into a Safer Future with Apple MDM

Your worst days in Apple IT rarely come from exotic zero‑days or obscure bugs. They come from predictable failure modes – confusing installers, weak controls, untested upgrades, over‑permissive access – and from humans under pressure. Since we’ll always be under pressure, the one thing you can control is how you manage your fleet.

The opportunity for Apple‑focused MSPs and IT leaders is to turn those painful experiences into concrete practices: declarative, automated updates; strict version policies; safe self‑service; least‑privilege access; and tested, repeatable rollouts for every Mac, iPhone, iPad, and Apple TV.

Addigy’s Apple‑native MDM platform is built to help you put those practices into action: automating device enrollment, tightening OS update control, and giving you the visibility to spot problems before they become the next horror story. The more of that you build into your environment, the fewer horror stories you’ll have to fear (or live through again)!